
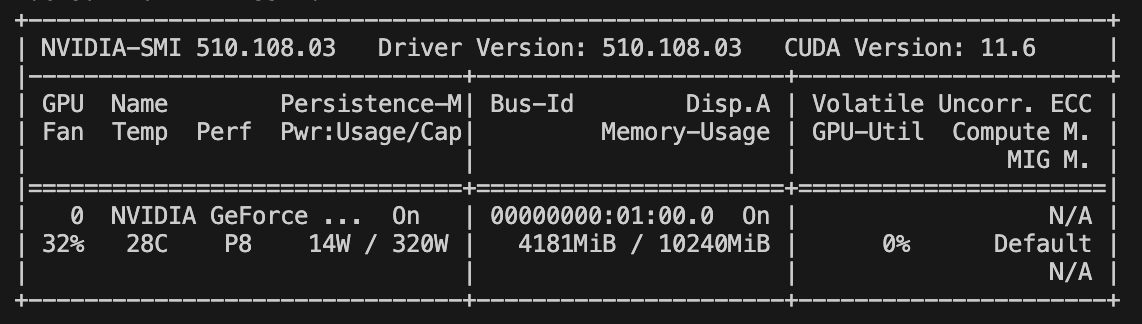

1. Check the installation status

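Run `nvidia-smi` to check the NVIDIA driver status, the device status, and so on.

```bash
nvidia-smi
```

The `CUDA Version` shown in its header is the highest CUDA version the installed driver supports; `nvcc --version` reports the version of the installed CUDA toolkit itself.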
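To script this check, here is a minimal sketch (the helper names `parse_smi_header` and `query_nvidia_smi` are hypothetical, not from any library) that runs `nvidia-smi` and pulls the driver and CUDA versions out of its header. It assumes the header contains `Driver Version:` and `CUDA Version:` fields, as current drivers print them; a different layout would need a different pattern.

```python
import re
import shutil
import subprocess
from typing import Dict, Optional


def parse_smi_header(text: str) -> Dict[str, Optional[str]]:
    """Extract the driver and CUDA versions from nvidia-smi's header line (assumed format)."""
    result: Dict[str, Optional[str]] = {}
    for key, label in (("driver", "Driver Version"), ("cuda", "CUDA Version")):
        match = re.search(label + r":\s*([\d.]+)", text)
        result[key] = match.group(1) if match else None
    return result


def query_nvidia_smi() -> Optional[Dict[str, Optional[str]]]:
    """Run nvidia-smi; return None if it is not installed or exits with an error."""
    if shutil.which("nvidia-smi") is None:
        return None
    proc = subprocess.run(["nvidia-smi"], capture_output=True, text=True)
    if proc.returncode != 0:
        return None
    return parse_smi_header(proc.stdout)


if __name__ == "__main__":
    print(query_nvidia_smi())
```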

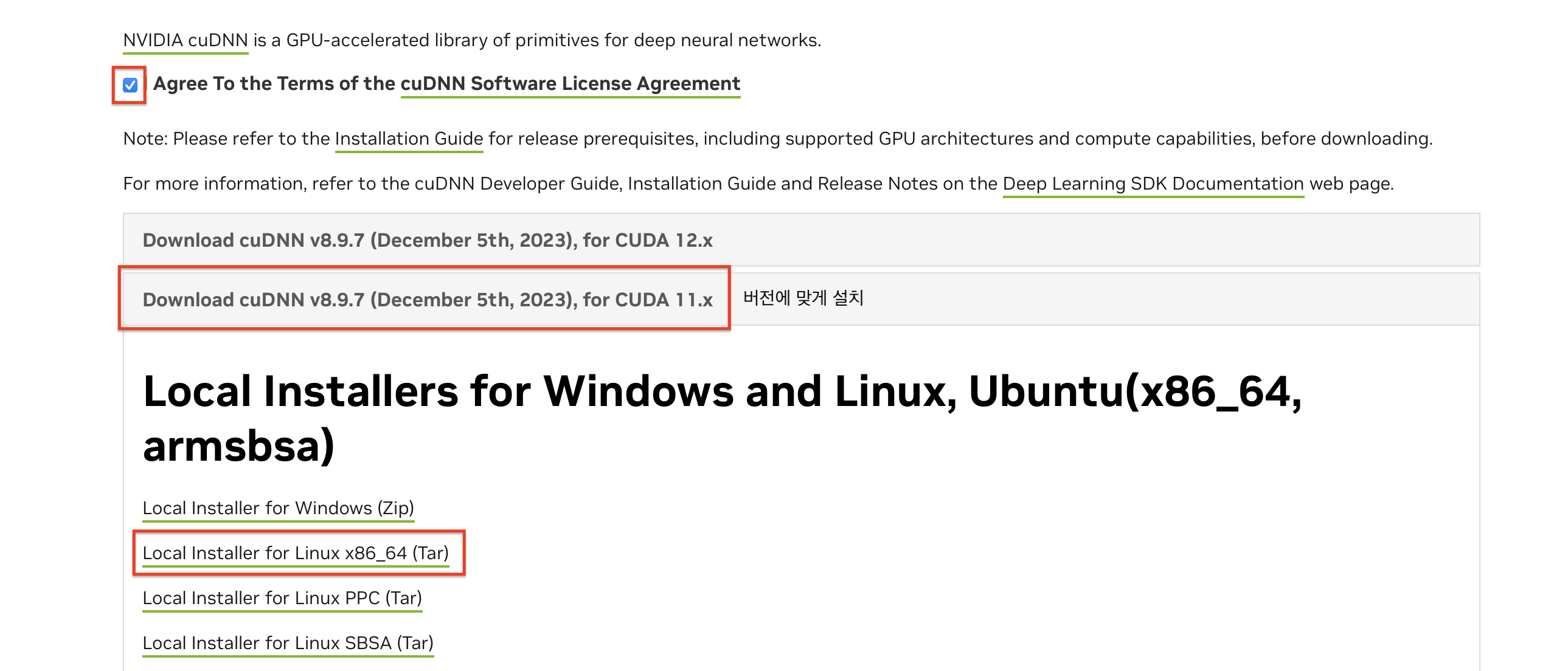

2. Download cuDNN

Log in on the cuDNN site and download the cuDNN build that matches the CUDA version shown above.

https://developer.nvidia.com/cudnn
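Before downloading, it can help to check that an archive was built for your CUDA major version. The sketch below assumes the tar archive naming used in this post (`cudnn-linux-x86_64-<version>_cuda<major>-archive.tar.xz`); the pattern and the helpers `parse_archive_name` and `matches_cuda` are illustrative assumptions, not an NVIDIA tool.

```python
import re
from typing import Optional, Tuple

# Assumed naming scheme of the cuDNN tar archives, e.g.
# cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz
ARCHIVE_RE = re.compile(r"cudnn-linux-x86_64-([\d.]+)_cuda(\d+)-archive\.tar\.xz$")


def parse_archive_name(name: str) -> Optional[Tuple[str, int]]:
    """Return (cudnn_version, cuda_major) parsed from an archive name, or None."""
    match = ARCHIVE_RE.search(name)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def matches_cuda(name: str, cuda_version: str) -> bool:
    """True if the archive targets the same CUDA major version (e.g. "11.7" -> 11)."""
    parsed = parse_archive_name(name)
    if parsed is None:
        return False
    return parsed[1] == int(cuda_version.split(".")[0])
```

For example, `matches_cuda("cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz", "11.7")` returns `True`. Only the major version is compared, on the assumption that a `_cuda11` build is meant for CUDA 11.x toolkits.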


3. Install the files

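In a terminal, move to the directory the archive was downloaded to (`cd Downloads/`) and extract it:

```bash
tar -xvf cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz
```

Copy the headers and libraries into the `/usr/local/cuda` directory and make them readable for all users:

```bash
cd cudnn-linux-x86_64-8.9.7.29_cuda11-archive
sudo cp include/cudnn* /usr/local/cuda/include
# -P keeps the libcudnn*.so.8 symbolic links as links instead of copying them as regular files
sudo cp -P lib/libcudnn* /usr/local/cuda/lib64
sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*
```

Then set up the `libcudnn*.so.8` symbolic links in the library directory of the actually installed CUDA 11.7 toolkit (`/usr/local/cuda-11/targets/x86_64-linux/lib`). If the libraries were copied without `-P`, these `.so.8` files end up as regular files and `ldconfig` warns that they are not symbolic links; the following commands point each one back at its `.so.8.9.7` file.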

```bash
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_adv_train.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_ops_train.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8
sudo ln -sf /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn.so.8.9.7 /usr/local/cuda-11/targets/x86_64-linux/lib/libcudnn.so.8
```

4. Verify the installation
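Before running `ldconfig`, you can confirm that the `libcudnn*.so.8` entries created by the `ln -sf` commands are symbolic links rather than regular files (regular files there are what trigger the `ldconfig` "is not a symbolic link" warning). A minimal sketch: the function name `non_symlink_sonames` is hypothetical, and `LIB_DIR` assumes the `/usr/local/cuda-11` path used in step 3.

```python
import glob
import os
from typing import List

# Library directory used by the ln commands in step 3 (assumption: adjust to your CUDA path)
LIB_DIR = "/usr/local/cuda-11/targets/x86_64-linux/lib"


def non_symlink_sonames(lib_dir: str) -> List[str]:
    """Return the libcudnn*.so.8 entries that exist but are regular files, not symlinks."""
    bad = []
    for path in sorted(glob.glob(os.path.join(lib_dir, "libcudnn*.so.8"))):
        if not os.path.islink(path):
            bad.append(os.path.basename(path))
    return bad


if __name__ == "__main__":
    if os.path.isdir(LIB_DIR):
        print(non_symlink_sonames(LIB_DIR) or "all libcudnn*.so.8 entries are symlinks")
```

An empty list means every `.so.8` entry is already a link.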

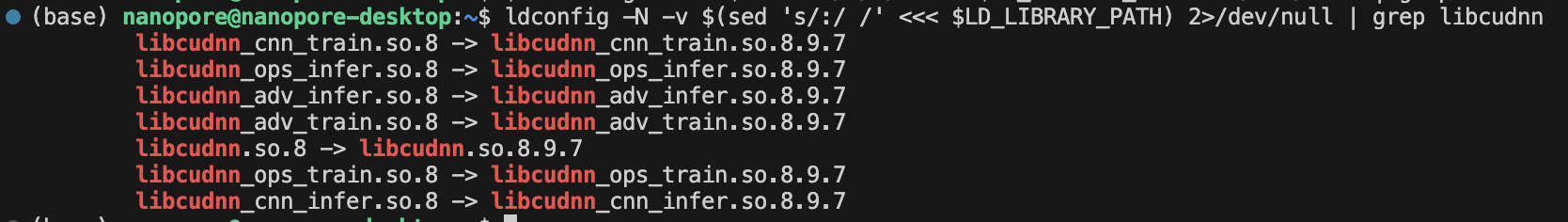

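Refresh the shared library cache, then list the cuDNN libraries the loader can find:

```bash
sudo ldconfig
# Scan the LD_LIBRARY_PATH directories (plus the default ones) without rebuilding the cache; keep only cuDNN
ldconfig -N -v $(sed 's/:/ /g' <<< $LD_LIBRARY_PATH) 2>/dev/null | grep libcudnn
```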
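The same `grep` check can be turned into a pass/fail test. This sketch assumes `ldconfig -v` prints each library as `name.so.N -> target`, and looks for the seven cuDNN 8 libraries linked in step 3; `parse_ldconfig` and `missing_libraries` are hypothetical helpers. To use it on a real system, feed it the output of `ldconfig -N -v` (for example captured with `subprocess.run`).

```python
import re
from typing import Dict, List

# The seven cuDNN 8 libraries linked in step 3
EXPECTED = [
    "libcudnn", "libcudnn_ops_infer", "libcudnn_ops_train",
    "libcudnn_cnn_infer", "libcudnn_cnn_train",
    "libcudnn_adv_infer", "libcudnn_adv_train",
]

# Assumed line format: "\tlibcudnn.so.8 -> libcudnn.so.8.9.7"
LINE_RE = re.compile(r"^\s*(libcudnn\w*)\.so\.(\d+)\s*->\s*(\S+)")


def parse_ldconfig(output: str) -> Dict[str, str]:
    """Map each libcudnn soname found in `ldconfig -v` output to its target file."""
    found = {}
    for line in output.splitlines():
        match = LINE_RE.match(line)
        if match:
            found[f"{match.group(1)}.so.{match.group(2)}"] = match.group(3)
    return found


def missing_libraries(output: str, major: int = 8) -> List[str]:
    """List the expected cuDNN sonames that ldconfig did not report."""
    found = parse_ldconfig(output)
    return [f"{n}.so.{major}" for n in EXPECTED if f"{n}.so.{major}" not in found]
```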

4-1. Verify with TensorFlow

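```python
import tensorflow as tf

# List the GPUs TensorFlow can see
gpus = tf.config.list_physical_devices('GPU')

# Allocate GPU memory on demand instead of reserving all of it at startup
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

print(len(gpus))
```

If the output is the number of GPUs in your computer, it worked!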
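If `print(len(gpus))` shows 0, one common cause is that TensorFlow could not load `libcudnn.so.8` (its log then typically contains a "Could not load dynamic library" message). The sketch below asks the dynamic loader for the library directly through `ctypes` and calls cuDNN's `cudnnGetVersion()`; the helper names are hypothetical, and the decoding assumes the cuDNN 8 encoding `MAJOR * 1000 + MINOR * 100 + PATCHLEVEL`.

```python
import ctypes
from typing import Optional, Tuple


def decode_cudnn_version(value: int) -> Tuple[int, int, int]:
    """Split a cuDNN 8 version number (MAJOR*1000 + MINOR*100 + PATCH) into its parts."""
    return value // 1000, (value % 1000) // 100, value % 100


def system_cudnn_version(soname: str = "libcudnn.so.8") -> Optional[Tuple[int, int, int]]:
    """Load cuDNN through the dynamic loader and ask the library for its version."""
    try:
        lib = ctypes.CDLL(soname)
    except OSError:
        return None  # loader cannot find the library: check ldconfig / LD_LIBRARY_PATH
    lib.cudnnGetVersion.restype = ctypes.c_size_t  # size_t cudnnGetVersion(void)
    return decode_cudnn_version(lib.cudnnGetVersion())


if __name__ == "__main__":
    print(system_cudnn_version())
```

With the archive from this post installed and visible to the loader, `system_cudnn_version()` should return `(8, 9, 7)`; `None` means `libcudnn.so.8` could not be loaded.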

4-2. Verify with PyTorch

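```python
import torch

# Check if a GPU is available
if torch.cuda.is_available():
    # Get the number of available GPUs
    num_gpus = torch.cuda.device_count()
    print(f"Number of available GPUs: {num_gpus}")

    # Get the name of each GPU
    for i in range(num_gpus):
        print(f"GPU {i + 1}: {torch.cuda.get_device_name(i)}")
else:
    print("No GPU available. Using CPU.")
```

Output:

```
Number of available GPUs: 1
GPU 1: NVIDIA GeForce RTX 3080
```

Note that the official PyTorch binaries ship with their own CUDA runtime and cuDNN, so this check mainly confirms that the driver and GPU are visible, not that the cuDNN copied above is the one being used.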
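A framework may load its own copy of cuDNN, so the checks above do not necessarily tell you which cuDNN is in `/usr/local/cuda`. To confirm the version of the headers copied in step 3, you can read `cudnn_version.h`, which in cuDNN 8 defines `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL`. A minimal sketch, with hypothetical helper names:

```python
import re
from typing import Optional, Tuple

HEADER = "/usr/local/cuda/include/cudnn_version.h"  # copied in step 3


def parse_cudnn_header(text: str) -> Optional[Tuple[int, int, int]]:
    """Read CUDNN_MAJOR / CUDNN_MINOR / CUDNN_PATCHLEVEL from cudnn_version.h text."""
    parts = {}
    for key, value in re.findall(r"#define\s+CUDNN_(MAJOR|MINOR|PATCHLEVEL)\s+(\d+)", text):
        parts[key] = int(value)
    if len(parts) != 3:
        return None  # header missing one of the three defines
    return parts["MAJOR"], parts["MINOR"], parts["PATCHLEVEL"]


def installed_cudnn_version(path: str = HEADER) -> Optional[Tuple[int, int, int]]:
    """Return the version from the installed header, or None if the file is absent."""
    try:
        with open(path, encoding="utf-8") as f:
            return parse_cudnn_header(f.read())
    except FileNotFoundError:
        return None


if __name__ == "__main__":
    print(installed_cudnn_version())
```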
